LLM Providers - OpenAI (GPT-5, GPT-4o), Claude & Custom Models

Tolgee lets you set up custom LLM providers. You can configure them per organization in the organization settings (Cloud version) or globally in the server configuration (Self-hosted version).

This guide shows the setup via the UI. The fields are mostly identical when you use the server configuration.

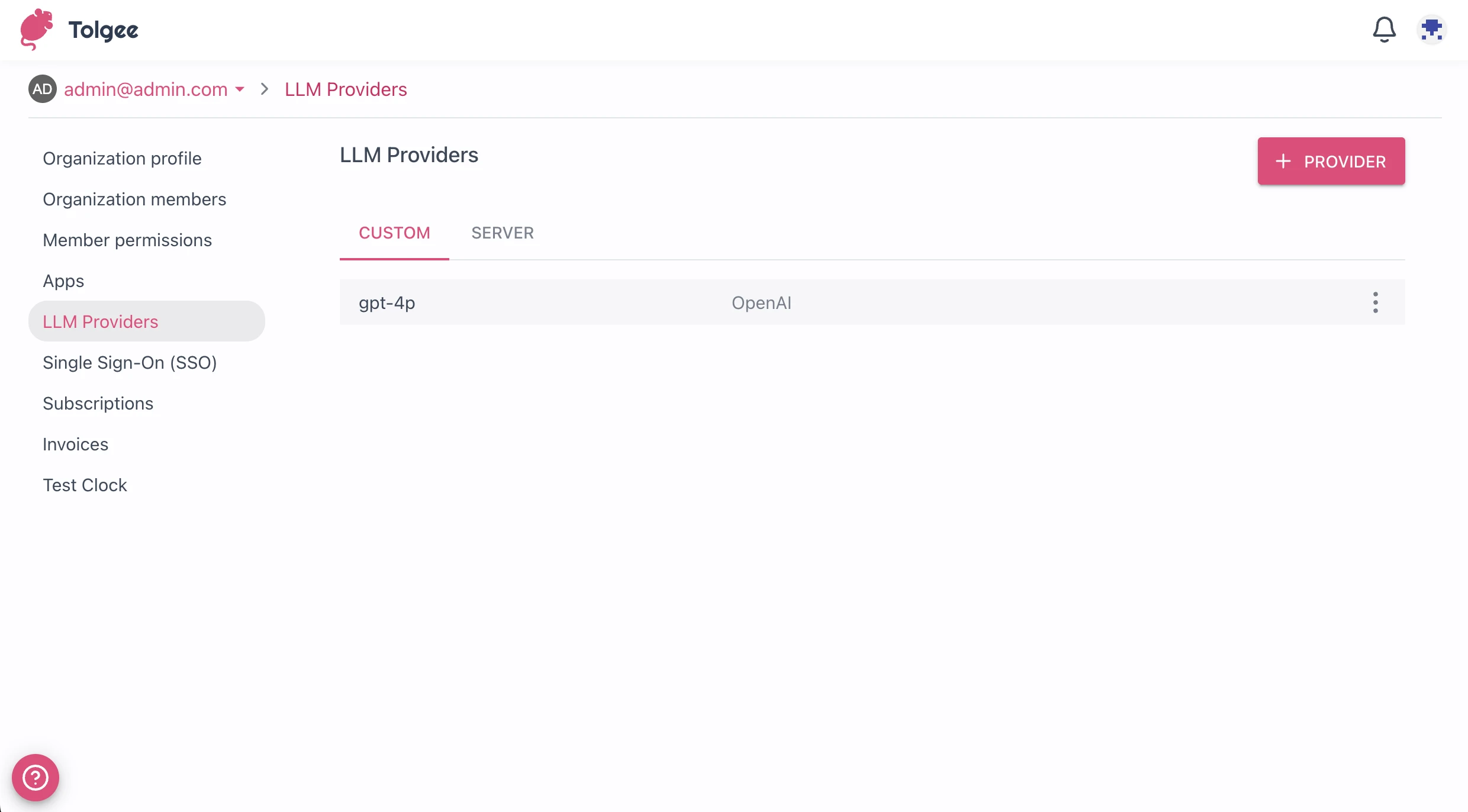

Setup via Organization Settings in the UI (Cloud)

- Go to Organization settings

- Select LLM Providers in the side menu

Here you can see the list of custom providers (configuration is per organization). Server-configured providers are listed in the Server tab. They cannot be modified via the UI.

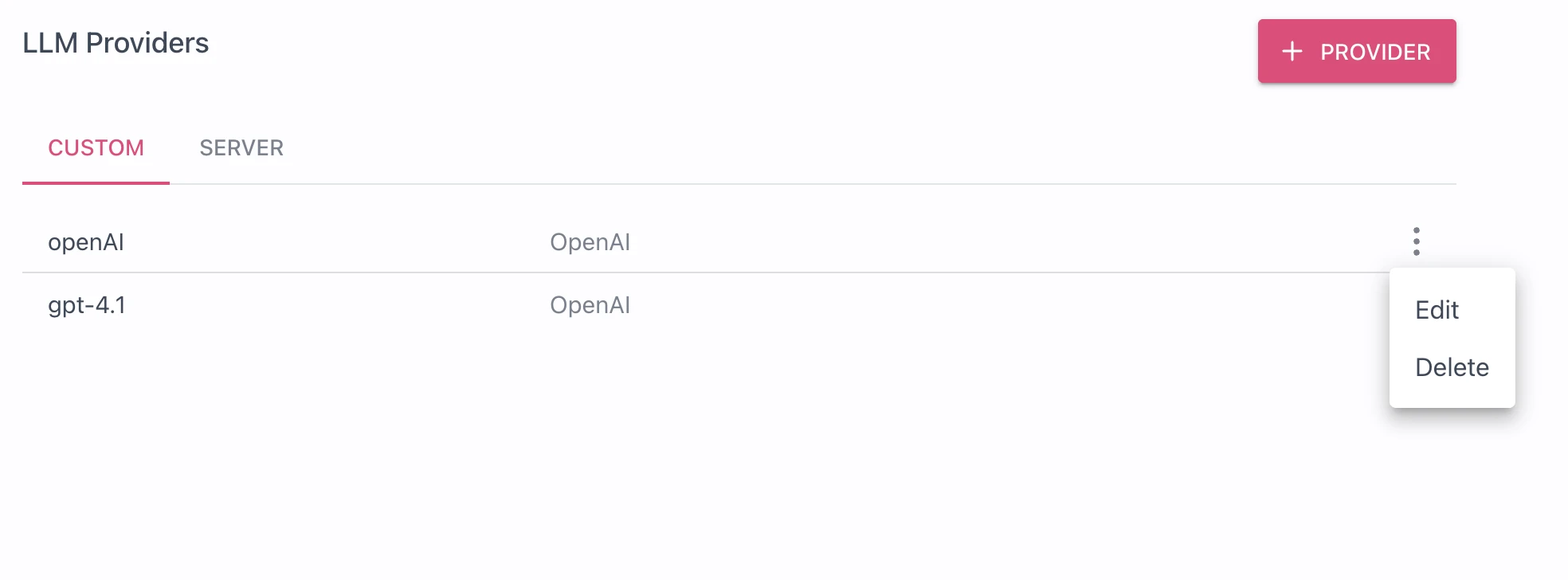

Editing or deleting a provider

Click on the ⋮ icon next to a provider.

From here, you can select Edit or Delete.

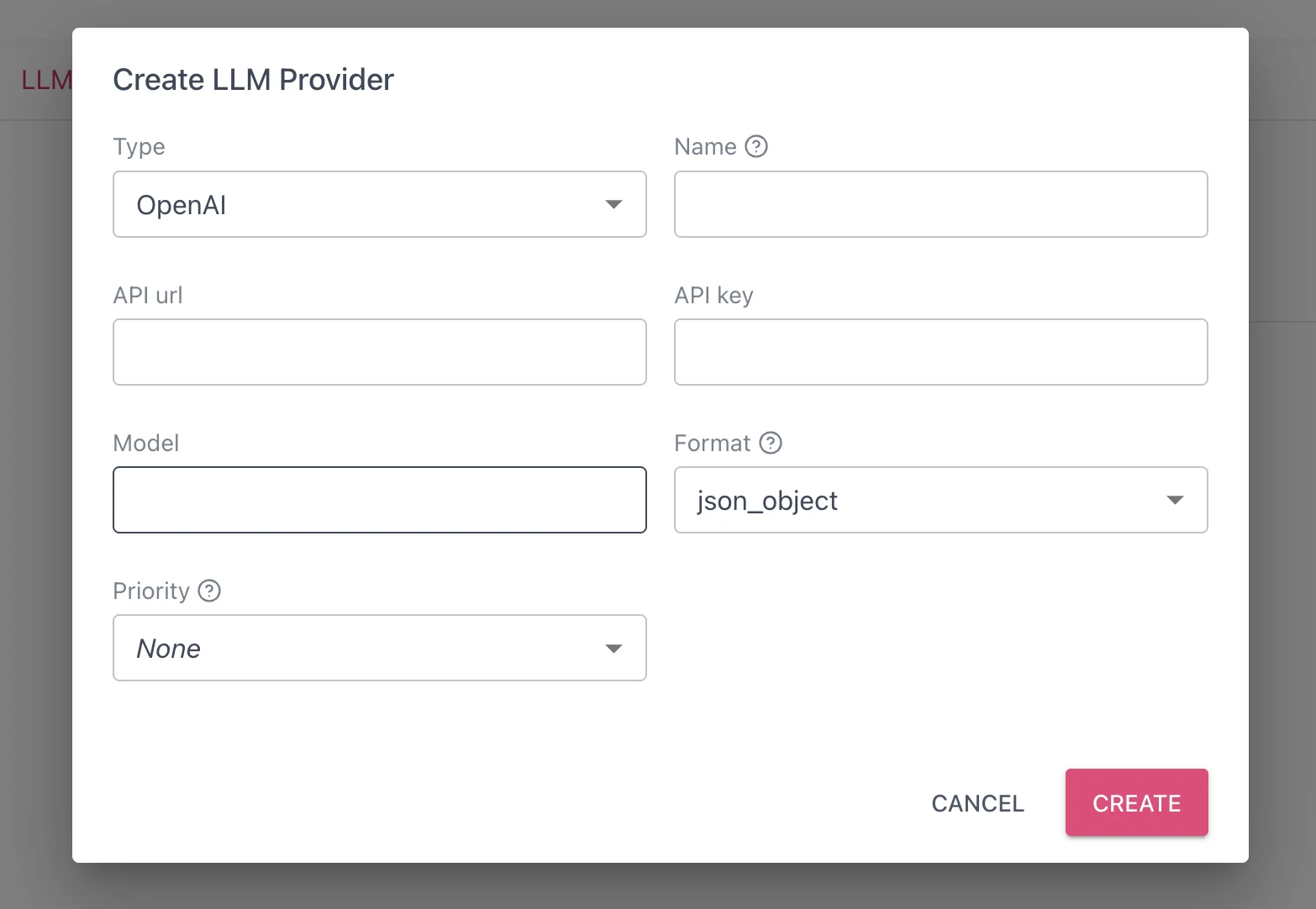

Add Custom LLM Provider

Click the + Provider button to open a provider dialog.

AI Provider Naming and Load Balancing

The provider name is displayed in the UI and also serves as the provider's ID.

If you give a custom provider the same name as a server provider, the custom provider overrides the server one.

If you specify multiple providers with the same name, Tolgee treats them as a single provider with multiple deployments and load-balances requests between them.

You can also set a priority for each deployment:

- LOW: the deployment is used only for batch operations

- HIGH: the deployment is used only for suggestions

This helps with load balancing and with avoiding rate limits. Batch operations usually generate more traffic, but they are repeatable: a failed request can be retried. A translation suggestion in the UI, on the other hand, is requested interactively and is not repeatable. That's why it makes sense to give suggestions higher priority.
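As an illustration, the sketch below uses the server configuration format from the Self-hosted server configuration section. It defines two deployments under the same provider name, so Tolgee treats them as one provider and balances load between them. One deployment is reserved for suggestions and the other for batch operations. The name gpt-4o, the Azure resource URLs, and the keys are hypothetical placeholders.

```yaml
tolgee:
  llm:
    providers:
      # Same name => one logical provider with two deployments (load balanced)
      - name: gpt-4o
        type: OPENAI_AZURE
        api-url: "https://<resource-a>.openai.azure.com"   # hypothetical Azure resource URL
        api-key: "<resource-a-key>"                         # placeholder
        deployment: gpt-4o
        format: json_schema
        priority: HIGH    # used only for translation suggestions in the UI
      - name: gpt-4o
        type: OPENAI_AZURE
        api-url: "https://<resource-b>.openai.azure.com"   # hypothetical Azure resource URL
        api-key: "<resource-b-key>"                         # placeholder
        deployment: gpt-4o
        format: json_schema
        priority: LOW     # used only for batch operations
```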

Supported LLM Provider Types

The provider type selects the API type. It determines which other configuration fields are available, based on that API's requirements.

OpenAI (ChatGPT)

It is designed to work with the official OpenAI API.

- API url: e.g., "https://api.openai.com"

- API key: specify your OpenAI API key without the Bearer prefix

- Model: specify your model name (e.g., "gpt-4o")

- Format: some older models don't support structured output, but for newer ones, select "json_schema"
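On a self-hosted instance, the same settings map onto the tolgee.llm.providers fields listed under Self-hosted server configuration. This is a minimal sketch. The provider name openai and the key value are placeholders.

```yaml
tolgee:
  llm:
    providers:
      - name: openai                      # hypothetical name; shown in the UI and used as the provider ID
        type: OPENAI
        api-url: "https://api.openai.com"
        api-key: "<your-openai-api-key>"  # placeholder; no "Bearer" prefix
        model: gpt-4o
        format: json_schema               # leave empty for older models without structured output
```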

Using the OpenAI-compatible API of LM Studio

LM Studio exposes an OpenAI-compatible API, so you can connect Tolgee to it using the OpenAI provider type.

Example configuration:

- API url: e.g., "http://localhost:1234" (default when running LM Studio locally)

- API key: empty

- Model: e.g., "gemma-3-4b-it-qat" (name of the model in LM Studio)

- Format: some older models don't support structured output, but for newer ones, select "json_schema"
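The same LM Studio setup could be expressed in the server configuration roughly as follows. The provider name lm-studio is a hypothetical placeholder. The model name must match the one shown in LM Studio.

```yaml
tolgee:
  llm:
    providers:
      - name: lm-studio                   # hypothetical name
        type: OPENAI                      # LM Studio speaks the OpenAI-compatible API
        api-url: "http://localhost:1234"  # LM Studio's default local address
        api-key: ""                       # left empty for LM Studio
        model: gemma-3-4b-it-qat          # model name as listed in LM Studio
        format: json_schema               # only if the loaded model supports structured output
```

Keep in mind that localhost is resolved by the Tolgee server. If Tolgee runs inside a container, localhost refers to the container itself. In that case, api-url needs to point to an address the container can reach.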

Azure OpenAI

It is designed to work with the Azure version of OpenAI API.

- API url: URL of your Azure resource

- API key: resource key

- Deployment: specify your AI Foundry deployment (e.g., gpt-4o)

- Format: some older models don't support structured output, but for newer ones, select "json_schema"
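A sketch of the equivalent server configuration follows. Azure uses deployment instead of model. The provider name is hypothetical. The resource URL is an assumed placeholder, and the exact hostname depends on how your Azure resource was created.

```yaml
tolgee:
  llm:
    providers:
      - name: azure-gpt-4o                                  # hypothetical name
        type: OPENAI_AZURE
        api-url: "https://<your-resource>.openai.azure.com" # placeholder; use your resource's URL
        api-key: "<your-resource-key>"                      # placeholder
        deployment: gpt-4o                                  # AI Foundry deployment name
        format: json_schema
```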

Anthropic Claude

It is designed to work with the Anthropic API.

- API url: e.g., "https://api.anthropic.com"

- API key: specify your Anthropic API key ("x-api-key" header)

- Model: specify your model name (e.g., claude-sonnet-4-20250514)
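For a self-hosted instance, an Anthropic provider might look like this in the server configuration. The name claude and the key value are placeholders. No format field is set, since none is listed for this provider type above.

```yaml
tolgee:
  llm:
    providers:
      - name: claude                         # hypothetical name
        type: ANTHROPIC
        api-url: "https://api.anthropic.com"
        api-key: "<your-anthropic-api-key>"  # placeholder; sent as the x-api-key header
        model: claude-sonnet-4-20250514
```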

Google AI (Gemini)

It is designed to work with the Google AI API.

- API url: e.g., "https://generativelanguage.googleapis.com"

- API key: specify your Google Console API key ("api-key" header)

- Model: specify your model name (e.g., gemini-2.0-flash)
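This is a minimal server-configuration sketch for Google AI. The name gemini and the key value are placeholders.

```yaml
tolgee:
  llm:
    providers:
      - name: gemini                      # hypothetical name
        type: GOOGLE_AI
        api-url: "https://generativelanguage.googleapis.com"
        api-key: "<your-google-api-key>"  # placeholder
        model: gemini-2.0-flash
```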

Self-hosted server configuration

When self-hosting, you can also configure LLM providers in the server configuration, the same way as through the UI.

The configuration property tolgee.llm.providers is an array of providers with the following fields:

```yaml
tolgee:
  llm:
    providers:
      - name: string
        type: OPENAI | OPENAI_AZURE | TOLGEE | ANTHROPIC | GOOGLE_AI
        api-url: string
        api-key: string
        model: string
        deployment: string
        format: string (e.g., "json_schema")
        priority: null | LOW | HIGH
        enabled: true | false (default: true)
```

Fill in the necessary fields according to the documentation above; you can leave other fields empty.